Preprint

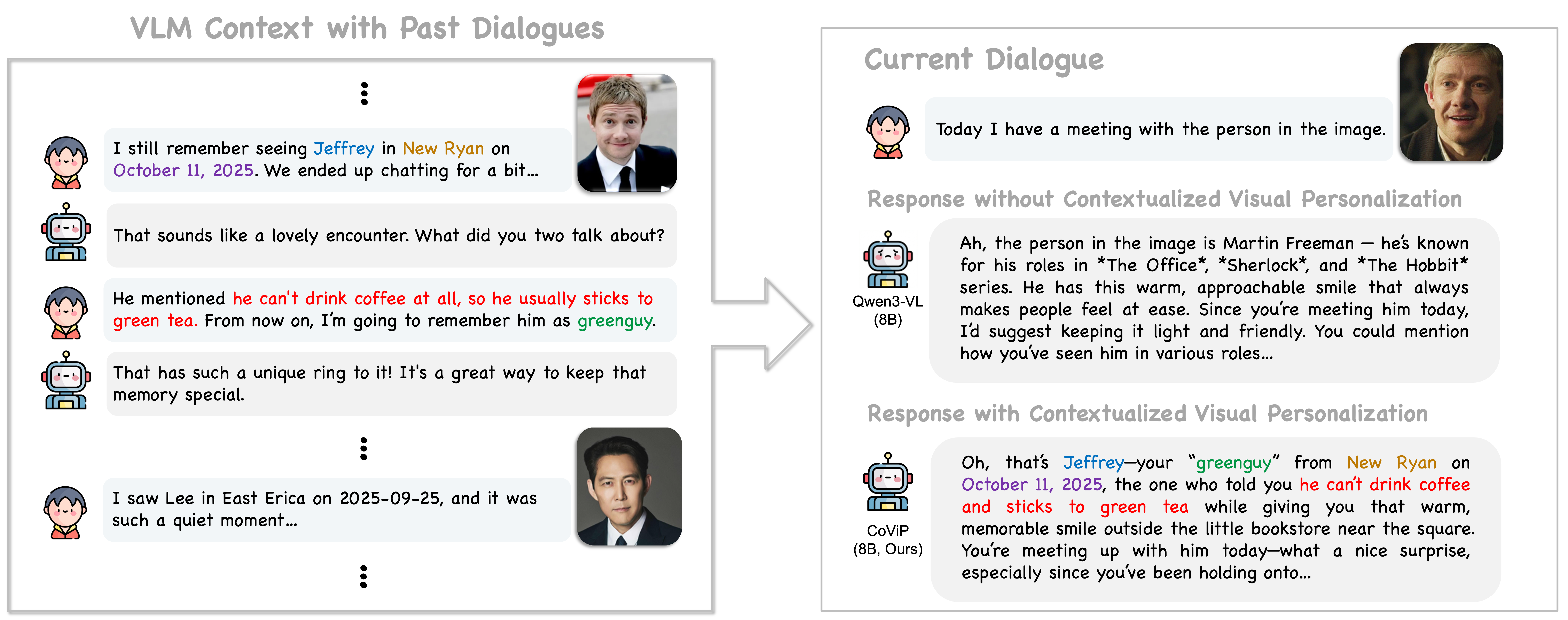

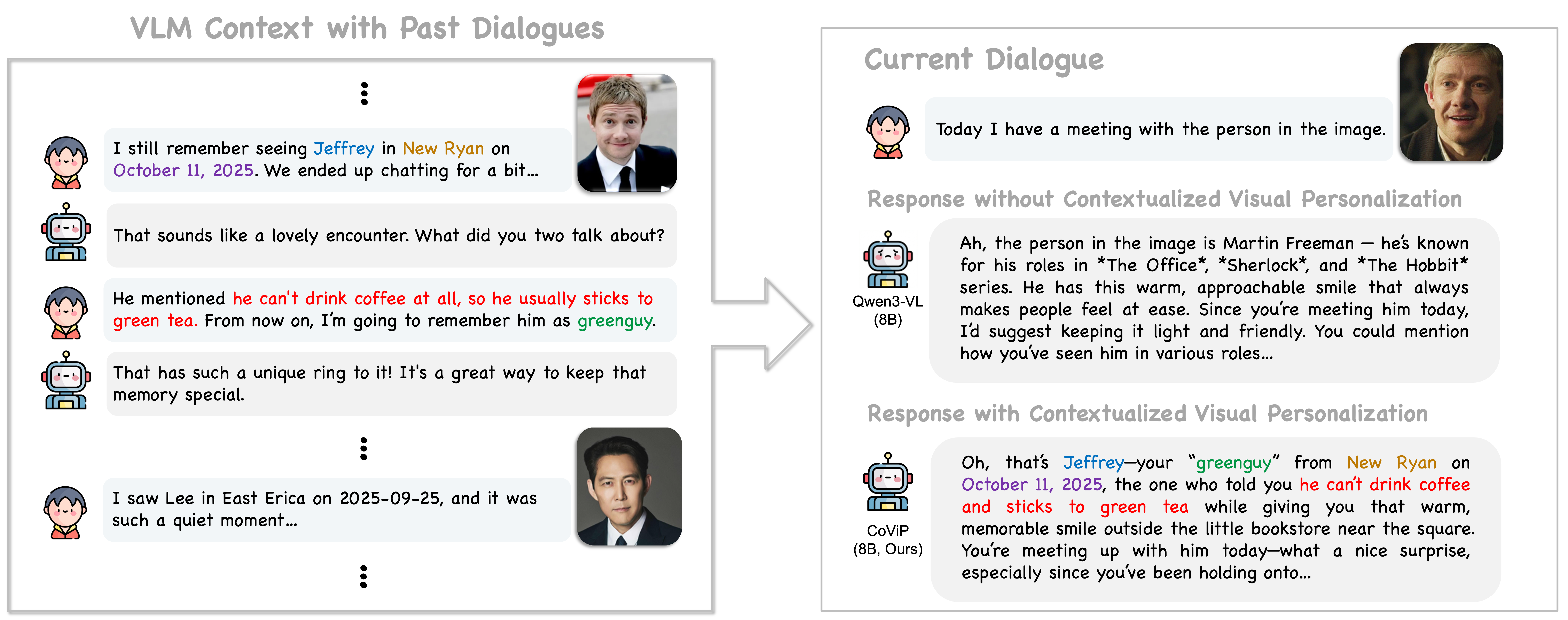

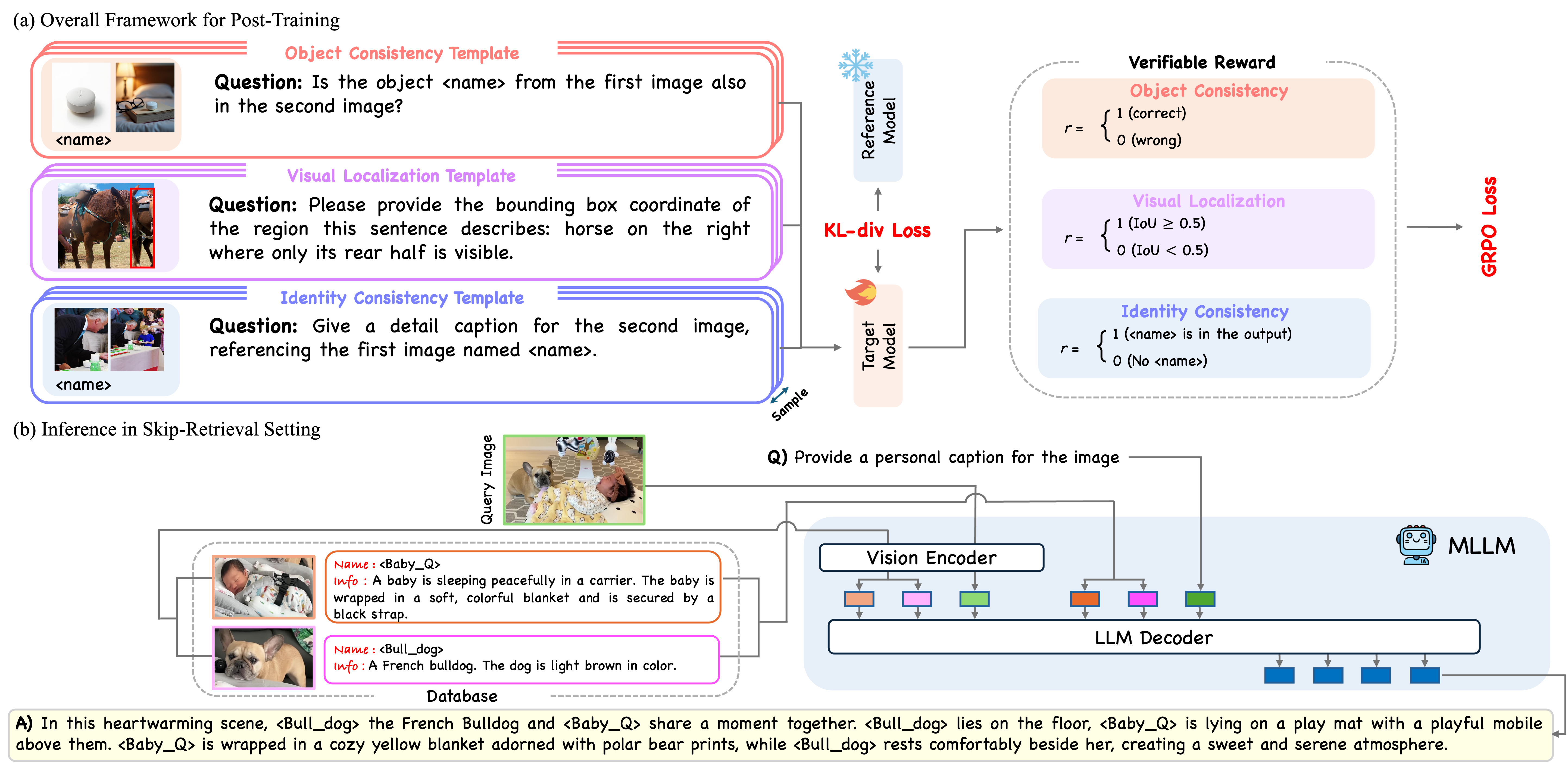

We introduce CoViP, a unified framework for contextualized visual personalization in VLMs, featuring a novel personalized image captioning benchmark, an RL-based post-training scheme, and diagnostic downstream personalization tasks.

Hi, I'm a fourth-year Ph.D. candidate in ECE at Seoul National University, working in the DSAIL Lab. I research computer vision and multi-modal reasoning, with a primary focus on post-training of generative models. I'm deeply interested in making multi-modal AI systems more expressive and personalized.

I received my B.S. (2018) and M.S. (2020) degrees in Mechanical Engineering from Seoul National University. In 2021, I served as a Military Science and Technology Researcher at the AI R&D Center of the Korea Military Academy.

The IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2026

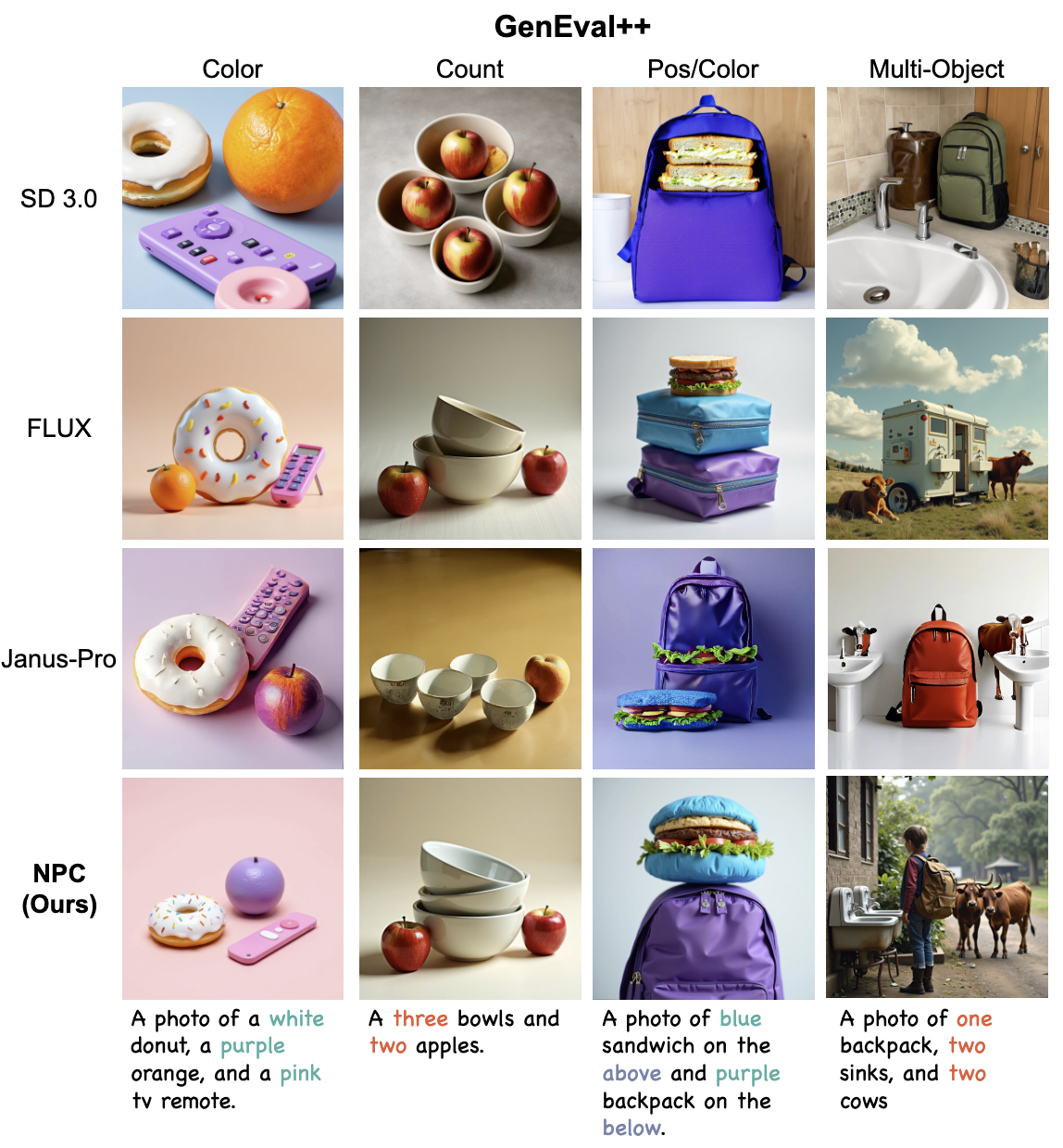

We introduce Negative Prompting for Image Correction (NPC), an automated pipeline for using negative prompts to enhance image-text alignment.
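For context on how a negative prompt acts during sampling: in common text-to-image diffusion pipelines, the negative prompt usually replaces the empty (unconditional) prompt in classifier-free guidance, which pushes each denoising step away from it. The sketch below is a minimal illustration under that assumption. NumPy arrays stand in for the model's noise predictions, and the function name `guided_noise` is hypothetical. It shows only the standard guidance rule, not how NPC chooses or generates its negative prompts.

```python
import numpy as np


def guided_noise(eps_neg: np.ndarray, eps_pos: np.ndarray, scale: float) -> np.ndarray:
    """Classifier-free guidance with a negative prompt (illustrative sketch).

    eps_neg: noise prediction conditioned on the negative prompt
             (assumed to take the place of the unconditional prediction)
    eps_pos: noise prediction conditioned on the target prompt
    scale:   guidance weight w; w = 1 returns eps_pos unchanged
    """
    # Move away from the negative-prompt prediction, toward the target prompt.
    return eps_neg + scale * (eps_pos - eps_neg)


# Toy usage with placeholder predictions.
eps_neg = np.zeros(2)
eps_pos = np.ones(2)
print(guided_noise(eps_neg, eps_pos, 7.5))
```

A larger `scale` strengthens the push away from whatever the negative prompt describes, usually at some cost in sample diversity.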

The IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2026

We propose the Style-friendly SNR sampler, which aggressively shifts the signal-to-noise ratio (SNR) distribution toward higher noise levels during fine-tuning to focus on noise levels where stylistic features emerge.
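The idea of shifting the SNR distribution toward higher noise can be illustrated with a short sketch. It assumes two things: a rectified-flow path x_t = (1 - t) x0 + t ε, for which SNR = ((1 - t) / t)^2, and a normal distribution over log-SNR. The function name and the mean and standard deviation values below are illustrative assumptions, not the paper's settings. A more negative mean moves the sampled training timesteps toward t = 1, where noise dominates.

```python
import numpy as np


def sample_timesteps(n: int, mean_log_snr: float, std_log_snr: float,
                     rng: np.random.Generator) -> np.ndarray:
    """Sample fine-tuning timesteps via a Gaussian over log-SNR (illustrative sketch)."""
    # Draw log-SNR values lambda ~ N(mean, std^2); lower lambda means noisier inputs.
    log_snr = rng.normal(mean_log_snr, std_log_snr, size=n)
    # Assumed rectified-flow path: lambda = 2 * log((1 - t) / t),
    # so t = sigmoid(-lambda / 2) = 1 / (1 + exp(lambda / 2)).
    return 1.0 / (1.0 + np.exp(log_snr / 2.0))


rng = np.random.default_rng(0)
balanced = sample_timesteps(10_000, mean_log_snr=0.0, std_log_snr=2.0, rng=rng)
high_noise = sample_timesteps(10_000, mean_log_snr=-6.0, std_log_snr=2.0, rng=rng)
print(balanced.mean(), high_noise.mean())  # the second mean sits much closer to 1
```

Working in log-SNR keeps the shift independent of a particular timestep parameterization. Only the final conversion to t depends on the assumed rectified-flow schedule.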

Neural Information Processing Systems (NeurIPS)

Selected as a WINNER of the Qualcomm Innovation Fellowship Korea (QIFK) 2025

We propose RePIC, a reinforced post-training framework that outperforms SFT-based methods in multi-concept personalized image captioning by enhancing visual recognition and generalization through reward templates and curated instructions.

British Machine Vision Conference (BMVC)

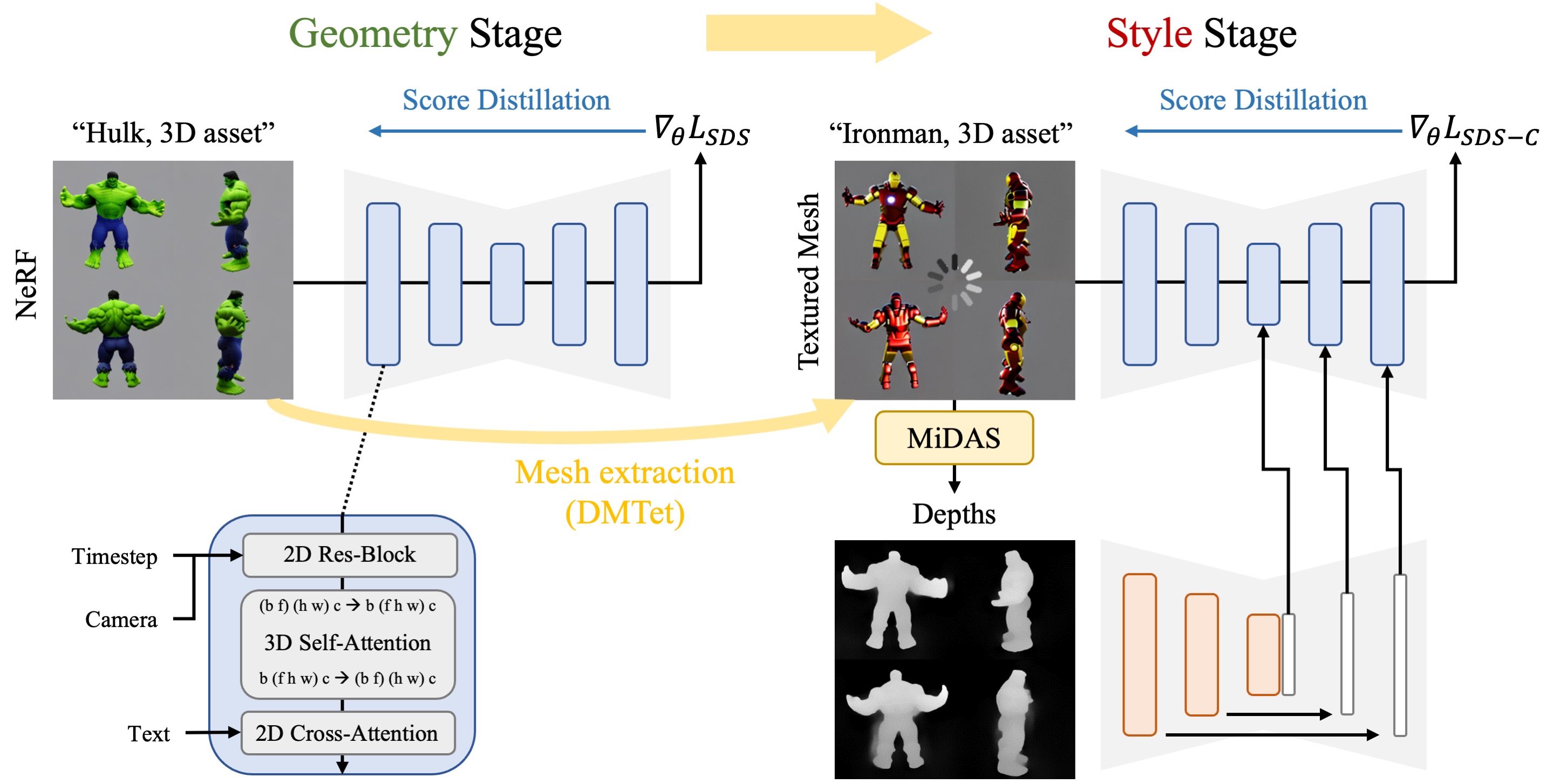

ControlDreamer enables high-quality 3D generation with creative geometry and styles via multi-view ControlNet.

European Conference on Computer Vision (ECCV)

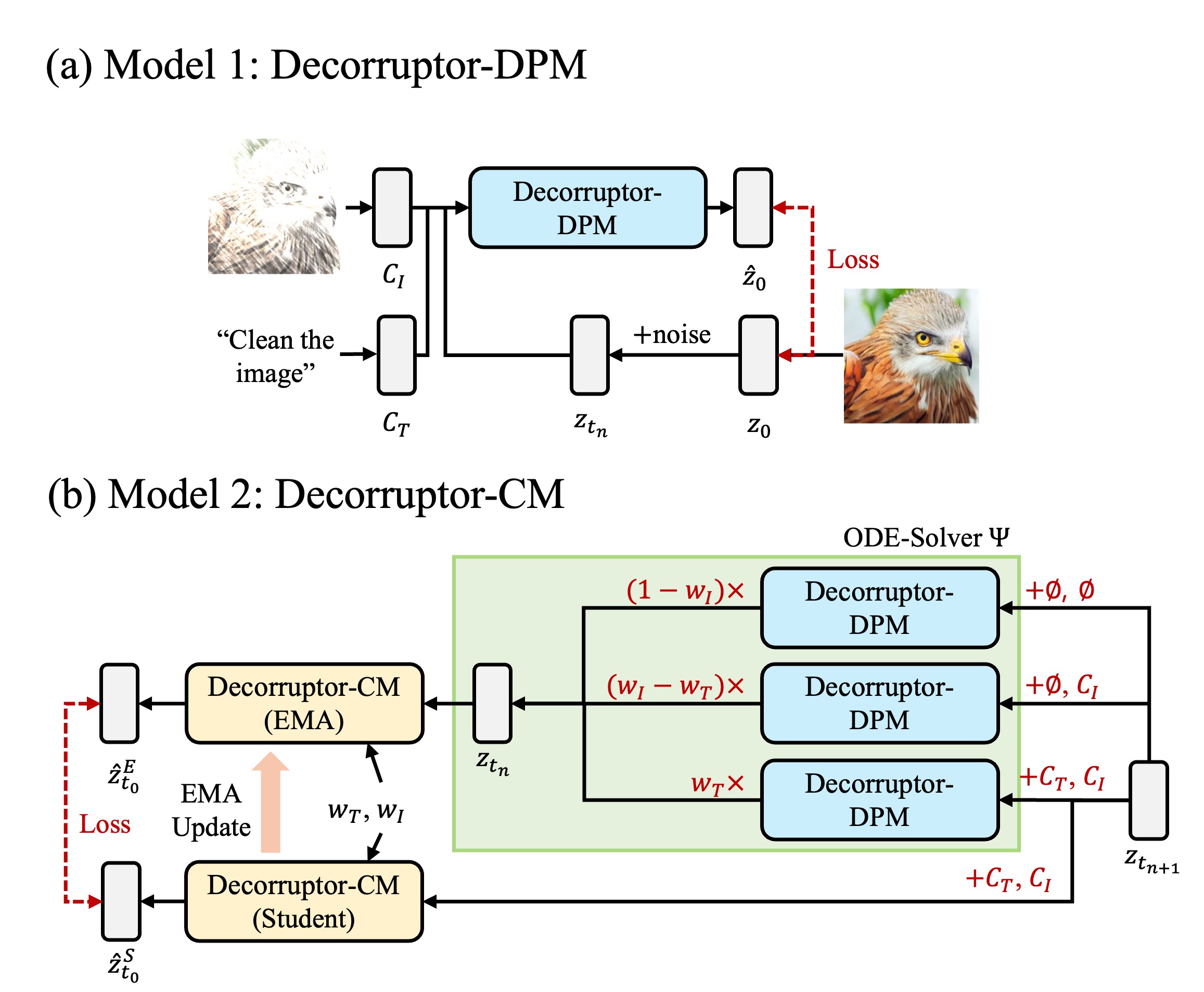

We propose Decorruptor to enhance the robustness of the diffusion model and accelerate diffusion-based image-level updates.

International Journal of Computer Vision (IJCV), IF: 11.6

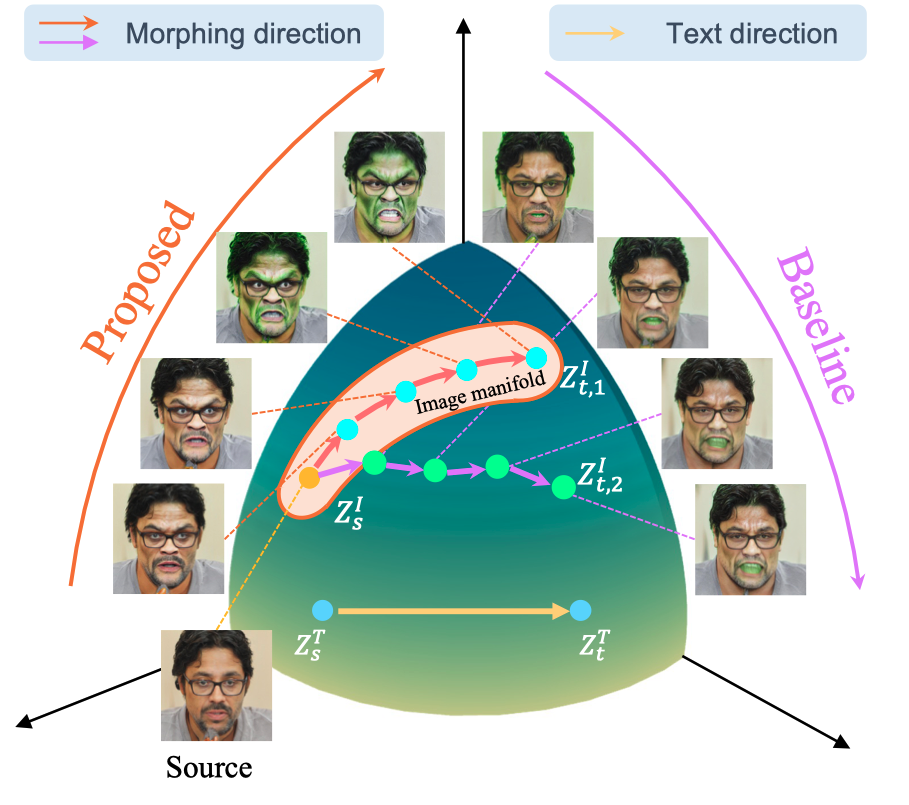

We improve a range of CLIP-guided image morphing baselines with our proposed inter- and intra-modality regularization losses.

ISA Transactions, IF: 5.9

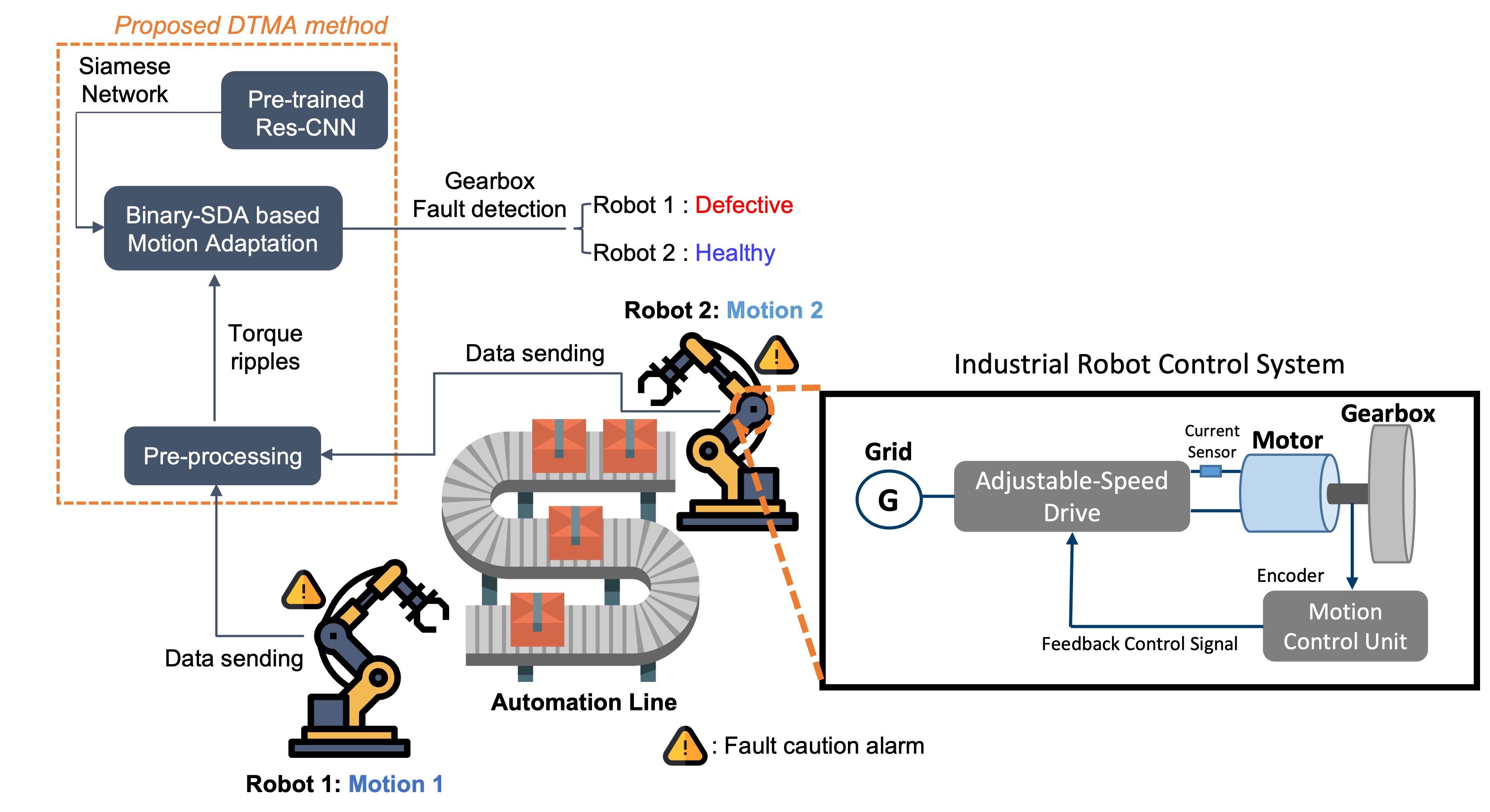

We present a deep learning-based motion-adaptive fault detection method for industrial robots using torque ripples.